Download Semantic Search demo

This package lets new users try and evaluate the semantic search functionality on their data, privately.

No connection to the internet is needed for the operation.

Hardware requirements

recommended: Intel Core i7 or better, 10 GB RAM, 26 GB storage (SSD preferred)

You have two options to choose from:

- Docker image

- Virtual Appliance with the docker image already imported on an Ubuntu-based Linux operating system with a GUI.

Supported languages

Supported languages

| ISO | Name | ISO | Name | ISO | Name |

|---|---|---|---|---|---|

| af | Afrikaans | ht | Haitian_Creole | pt | Portuguese |

| am | Amharic | hu | Hungarian | ro | Romanian |

| ar | Arabic | hy | Armenian | ru | Russian |

| as | Assamese | id | Indonesian | rw | Kinyarwanda |

| az | Azerbaijani | ig | Igbo | si | Sinhalese |

| be | Belarusian | is | Icelandic | sk | Slovak |

| bg | Bulgarian | it | Italian | sl | Slovenian |

| bn | Bengali | ja | Japanese | sm | Samoan |

| bo | Tibetan | jv | Javanese | sn | Shona |

| bs | Bosnian | ka | Georgian | so | Somali |

| ca | Catalan | kk | Kazakh | sq | Albanian |

| ceb | Cebuano | km | Khmer | sr | Serbian |

| co | Corsican | kn | Kannada | st | Sesotho |

| cs | Czech | ko | Korean | su | Sundanese |

| cy | Welsh | ku | Kurdish | sv | Swedish |

| da | Danish | ky | Kyrgyz | sw | Swahili |

| de | German | la | Latin | ta | Tamil |

| el | Greek | lb | Luxembourgish | te | Telugu |

| en | English | lo | Laothian | tg | Tajik |

| eo | Esperanto | lt | Lithuanian | th | Thai |

| es | Spanish | lv | Latvian | tk | Turkmen |

| et | Estonian | mg | Malagasy | tl | Tagalog |

| eu | Basque | mi | Maori | tr | Turkish |

| fa | Persian | mk | Macedonian | tt | Tatar |

| fi | Finnish | ml | Malayalam | ug | Uighur |

| fr | French | mn | Mongolian | uk | Ukrainian |

| fy | Frisian | mr | Marathi | ur | Urdu |

| ga | Irish | ms | Malay | uz | Uzbek |

| gd | Scots_Gaelic | mt | Maltese | vi | Vietnamese |

| gl | Galician | my | Burmese | wo | Wolof |

| gu | Gujarati | ne | Nepali | xh | Xhosa |

| ha | Hausa | nl | Dutch | yi | Yiddish |

| haw | Hawaiian | no | Norwegian | yo | Yoruba |

| he | Hebrew | ny | Nyanja | zh | Chinese |

| hi | Hindi | or | Oriya | zu | Zulu |

| hmn | Hmong | pa | Punjabi | ||

| hr | Croatian | pl | Polish |

Option A: Docker image

Download and getting started

Choose CPU or if you have a machine with GPU, choose the GPU (CUDA) version. GPU version is approximately 7x faster than the CPU version. The time saving of faster processing depends on the amount of data you want to index yourself.

For example, a transcript of 30-minute long conversation has been indexed in 3 seconds on GPU, and in 20 seconds on CPU.

For the Semantic search itself, the CPU version performs just as fast as the GPU version (tested on an index coming from 120 hours of audio transcripts).

Getting started with the docker image

The following Readme.md is part of the docker image too.

Semantic search

Phonexia’s text-embedding based semantic search project demo

Prerequisites

- installed Docker

- 25 GB of free HDD space

- 8 GB of RAM

- basic experience with using Docker images

Running in Docker

First you’ll need to load CPU/GPU image from provided tar file(s).

docker load --input phonexia_semantic_search_<cpu/cuda>_<hash>.tar.gz

Assuming that you have all the necessary data (textual documents) in the folder <your_data_directory> you may then mount it via -v <your_data_directory>:/home/data to /home/data folder in Docker container.

We suggest to run the container in interactive (-it) mode to act as normal terminal.

docker run -it -v <your_data_directory>:/home/data phonexia_semantic_search_cpu

To run with GPU support use image with _cuda suffix together with --gpus all. This requires NVIDIA Container Toolkit as well properly setup NVIDIA driver.

docker run -it --gpus all -v <your_data_directory>:/home/data phonexia_semantic_search_cuda

Functionality

For the purposes of this demo we propose using two scripts: indexer.py and searcher.py.

indexer.py is intended to prepare semantic vector representation of presented text documents – so called index

searcher.py provides minimal command-line like UI for searching through the indexed documents

Indexing document collection

To test that indexing is working you may index some example data (copied from ISW ):

python3 indexer.py --document_list /home/example_data/example_isw_10_document_list.txt --output_index_root_dir /home/indices

Then you may identify the index folder on stdout: e.g. Output index directory: /home/indices/MODEL_1.0.0__DATA_example_isw_10_document_list.txt_TIME_2023-04-19--13-46-24--360

This folder is important, because it’s used as an input for searcher.py script that does actual searching.

Simplest possible way to index your own data in Docker:

python3 indexer.py --document_list <document_list> --output_index_root_dir <output_dir>

Where <document_list> e.g. /home/documents.txt may be a plain list of full paths to *.txt documents in UTF-8 encoding. Each document should contain relatively short lines (~sentences) and not long paragraphs on one line – these would get truncated during the indexing process.

Example content of document list:

/home/data/document_0001.txt

/home/data/document_0003.txt

/home/data/document_0004.txt

Each document may also have metadata associated with it, these are textual and specified after the space symbol in <document_list>

Example content of document list with metadata:

/home/data/meeting_ashari_bago.txt STT transcript of meeting between bosses Ashari and Bago from 17.3.2021

/home/data/doc_twitter.1234.txt Twitter posts related to eventful event

In this case metadata (e.g. STT transcript of meeting between bosses Ashari and Bago from 17.3.2021) can be later displayed via the searcher.py script.

In a CUDA supporting container you may still run indexing on CPU by specifying --device cpu option to indexer.py script.

Currently there’s no UI to append documents to existing index, or merge multiple indices together. Nevertheless it’s not a technical problem to add this feature in newer version of the Semantic search.

Searching document collection index

To test that searcher.py is working you may use the provided index on the small example data’s index directory. E.g.:

python3 searcher.py --index /home/indices/MODEL_1.0.0__DATA_example_isw_10_document_list.txt_TIME_2023-04-19--13-46-24--360

If you want to search over your data you must provide an index directory created with the indexer.py script:

python3 searcher.py --index <index_dir>

Where <index_dir> is the output directory of indexer (e.g. /home/indices/MODEL_1.0.0__DATA_example_isw_10_document_list.txt_TIME_2023-04-19--13-46-24--360)

This command starts the UI for searching over index with its own help inside the UI.

In a CUDA supporting container you may still run indexing on CPU by specifying --device cpu option to searcher.py script.

Troubleshooting

indexer.py script may run into memory issues with very long documents during indexing. In that case try to lower the value for parameter --batch_size see python3 indexer.py -h

If that’s not sufficient, try to lower the value for parameter --max_sentence_length together with that, preferably with --auto_split feature on (see next paragraph). Note: Lowering only --max_sentence_length may lead to removal of parts of the sentences in case your documents contain long lines.

indexer.py will print warning in case length of line exceeds model capabilities. In that case you may correct your data before indexing or use –auto_split parameter (see script’s help). Note that --auto_split may incorrectly separate sentence inside the unlikely type of shortcuts (e.g. “N. A. S. A”) or other unusual data.

The CUDA version and its cooperation with Docker may be an issue where we so far suggest running on CPU, especially for small amount of data. Indexing around 1000 lines of text documents should be under one minute even when running on CPU.

Disclaimer

These scripts are written as a proof of concept rather than production ready solution.

Feedback

We value your feedback and would love to hear from you! Please don’t hesitate to reach out to us via email at [email protected] with any comments, suggestions, or questions you may have about this project. Your feedback is greatly appreciated.

Option B: Virtual Appliance

Download and get started

The Virtual Appliance OVA already contains the CPU docker image imported and configured. You only have to import the OVA into VirtualBox or VMware.

Getting started

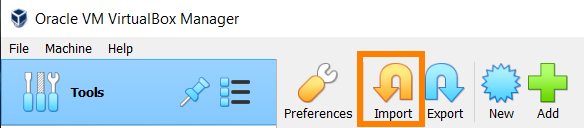

In order to start using the OVA package, simply import it to VirtualBox manager (Free download from https://www.virtualbox.org/wiki/Downloads Make sure to use version 7.x)

After the import is complete, simply start the Phonexia Semantic Search Virtual machine and let it boot.

When prompted for a password, type phonexia

NOTE: search is initiated after submitting an empty line

FAQ “Why is the search not initiated?“

Note: the search can contain multiple queries, each on a separate line. For example, to search all the cases related to vacation, you chose to search for three queries:

- I bought plane tickets

- holiday

- i am not going to work tomorrow

You simply type the query in – one after another, submitting each one with the Return key.

Once you submit an empty line, the command is initiated. See line 10 – after this, the search started.

phxadmin@phxadmin-VirtualBox:~/Desktop$ searcher [sudo] password for phxadmin:phonexia Starting UI... Loading index... Running on device 'cpu' Mode: "semantic"; Enter search query (or ":q" to quit, or ":h" for help): I bought plane tickets holiday i am not going to work tomorrow ↵ Searching... ---------------- RESULTS: -------------------------------- score: 0.6183 id: 0198 txt: i'm going to call them before i leave for work tomorrow and and maybe... score: 0.6162 id: 0061 txt: as of tomorrow i'm going to have on my computer commercial software... score: 0.6036 id: 0162 txt: but i'm listen i'm going to crash 'cause i gotta get to work tomorrow... score: 0.5926 id: 0213 txt: what time do you go to work tomorrow score: 0.5881 id: 0023 txt: yeah well that's what i'm gonna do i'm going into the office tomorrow... score: 0.5746 id: 0093 txt: you know people have to work tomorrow drifted work tomorrow score: 0.5744 id: 0146 txt: tonight i do not have to go to work tonight or tomorrow night so . . .

Feedback

We value your feedback and would love to hear from you! Please don’t hesitate to reach out to us via email at [email protected]

with any comments, suggestions, or questions you may have about this project. Your feedback is greatly appreciated!